[Graphics] Transparency Rendering

keywords: Graphics, Transparency Rendering, Semi-transparency, Translucency, Translucent Shader, Early Z, Hi-Z, Discard, Depth Test, Depth Writing

Documents

An overview of a few methods for rendering transparency in OpenGL:

- Alpha Testing

- Sorting and Blending

- Depth Peeling

- Alpha to Coverage

Origin: OpenGL ES2 Alpha test problems

Blending

https://learnopengl.com/Advanced-OpenGL/Blending

Order-independent transparency - LearnOpenGL

https://learnopengl.com/Guest-Articles/2020/OIT/Introduction

Order Independent Transparency In OpenGL 4.x

https://on-demand.gputechconf.com/gtc/2014/presentations/S4385-order-independent-transparency-opengl.pdf

Early Z and Discard on PowerVR

Conclusions:

- Either Early-Z or discard can prevent a fragment from being drawn, so in principle it is possible to do one and then the other. On PowerVR, however, only one of the two (Early-Z or discard) can be executed at a time.

- Opaque primitives: Early-Z.

- Primitives whose pixel depth is unknown before shading: discard (the depth test runs after the fragment shader has executed).

- In Unreal Engine, Masked materials whose Opacity Mask has been parameterized, and Opaque materials whose Pixel Depth Offset has been parameterized, are the primitives that need alpha test to be force-enabled.

- Semi-transparent primitives: Alpha Blending.

- The reason why PowerVR (a TBDR) can execute only one of the two (Early-Z and discard):

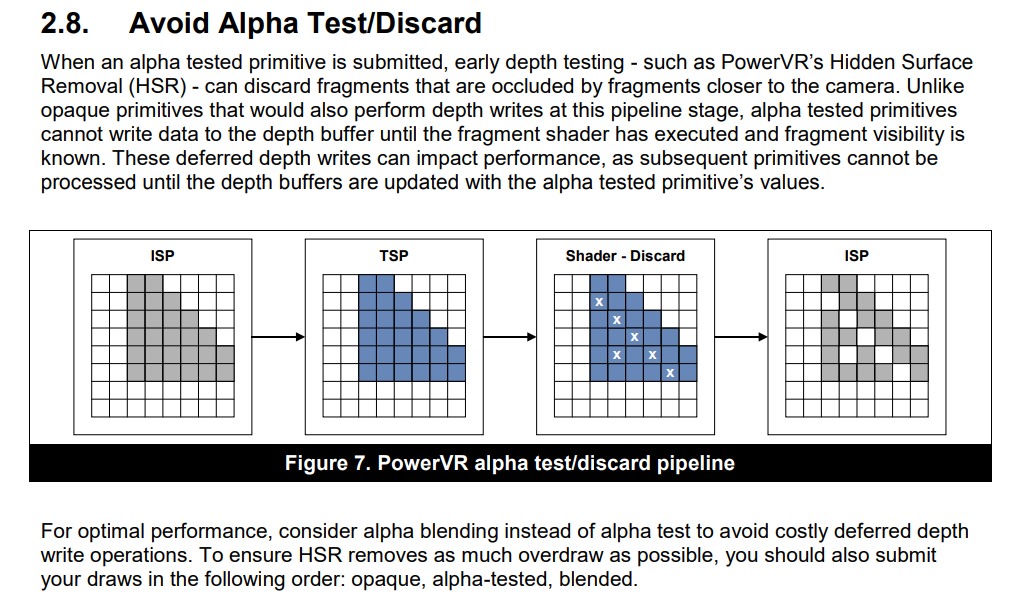

- When an alpha tested primitive is submitted, early depth testing - such as PowerVR’s Hidden Surface Removal (HSR) - can discard fragments that are occluded by fragments closer to the camera. Unlike opaque primitives that would also perform depth writes at this pipeline stage, alpha tested primitives cannot write data to the depth buffer until the fragment shader has executed and fragment visibility is known. These deferred depth writes can impact performance, as subsequent primitives cannot be processed until the depth buffers are updated with the alpha tested primitive’s values.

- To avoid accessing main memory (and use faster cache instead, which also saves power), the discard logic is bundled with the depth-testing logic in hardware, so alpha-tested primitives write to the depth buffer in the deferred step.

- TBRs and TBDRs do blending on-chip (faster and less power-hungry than going to main memory), so blending should be favoured for transparency. The usual procedure to render blended polygons correctly is to disable depth writes (but not depth tests) and render triangles in back-to-front depth order (unless the blend operation is order-independent).
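The blending procedure in the last point can be sketched on the CPU for a single pixel. This is only a toy model, not GPU code: `Fragment`, `blend_over`, `shade_pixel`, and `back_to_front` are hypothetical names, smaller depth is assumed to mean closer to the camera, and the blend is assumed to be the common `SRC_ALPHA` / `ONE_MINUS_SRC_ALPHA` "over" operator.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    depth: float   # assumed convention: smaller = closer to the camera
    color: tuple   # (r, g, b), non-premultiplied
    alpha: float


def blend_over(dst, frag):
    """Blend frag over dst: src * alpha + dst * (1 - alpha)."""
    a = frag.alpha
    return tuple(a * s + (1.0 - a) * d for s, d in zip(frag.color, dst))


def shade_pixel(background, opaque_depth, fragments):
    """Blend translucent fragments in the given order for one pixel.

    The depth test stays enabled (fragments behind the opaque depth are
    rejected), but depth writes are disabled: opaque_depth never changes.
    """
    color = background
    for frag in fragments:
        if frag.depth >= opaque_depth:   # depth test fails: hidden by opaque geometry
            continue
        color = blend_over(color, frag)
    return color


def back_to_front(fragments):
    """Farthest fragment first."""
    return sorted(fragments, key=lambda f: f.depth, reverse=True)


red = Fragment(depth=0.3, color=(1.0, 0.0, 0.0), alpha=0.5)
green = Fragment(depth=0.6, color=(0.0, 1.0, 0.0), alpha=0.5)
hidden = Fragment(depth=0.9, color=(0.0, 0.0, 1.0), alpha=0.5)
submitted = [red, hidden, green]
black = (0.0, 0.0, 0.0)

sorted_result = shade_pixel(black, 0.8, back_to_front(submitted))   # (0.5, 0.25, 0.0)
unsorted_result = shade_pixel(black, 0.8, submitted)                # (0.25, 0.5, 0.0)
```

The two results differ because the "over" operator is not commutative, which is why the sort is needed unless the blend operation is order-independent. The `hidden` fragment never contributes, because the depth test against the opaque depth still runs.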

References:

PowerVR Performance Recommendations - The Golden Rules

Quoted from Is discard bad for program performance in OpenGL? - stackoverflow.com

– gmaclachlan:

“discard” is bad for every mainstream graphics acceleration technique - IMR, TBR, TBDR. This is because visibility of a fragment (and hence depth) is only determinable after fragment processing and not during Early-Z or PowerVR’s HSR (hidden surface removal) etc. The further down the graphics pipeline something gets before removal tends to indicate its effect on performance; in this case more processing of fragments + disruption of depth processing of other polygons = bad effect.

If you must use discard make sure that only the tris that need it are rendered with a shader containing it and, to minimise its effect on overall rendering performance, render your objects in the order: opaque, discard, blended.

Incidentally, only PowerVR hardware determines visibility in the deferred step (hence it’s the only GPU termed as “TBDR”). Other solutions may be tile-based (TBR), but are still using Early Z techniques dependent on submission order like an IMR does. TBRs and TBDRs do blending on-chip (faster, less power-hungry than going to main memory) so blending should be favoured for transparency. The usual procedure to render blended polygons correctly is to disable depth writes (but not tests) and render tris in back-to-front depth order (unless the blend operation is order-independent). Often approximate sorting is good enough. Geometry should be such that large areas of completely transparent fragments are avoided. More than one fragment still gets processed per pixel this way, but HW depth optimisation isn’t interrupted like with discarded fragments.
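The submission order recommended above (opaque, then discard, then blended, with blended draws back-to-front) could be expressed as a sort key. This is a minimal sketch under assumptions: `Pass`, `DrawCall`, `view_depth`, and `sort_draws` are hypothetical names that do not come from any engine, and opaque and discard draws simply keep their submission order.

```python
from dataclasses import dataclass
from enum import IntEnum


class Pass(IntEnum):
    OPAQUE = 0
    DISCARD = 1   # alpha-tested: the shader contains `discard`
    BLENDED = 2


@dataclass
class DrawCall:
    name: str
    render_pass: Pass
    view_depth: float   # assumed: distance from the camera, larger = farther


def sort_draws(draws):
    """Order draws as opaque, discard, blended; blended ones back-to-front."""
    def key(draw):
        # Only blended draws are depth-sorted (farthest first). The other
        # passes share a constant key, so the stable sort keeps their order.
        depth_key = -draw.view_depth if draw.render_pass is Pass.BLENDED else 0.0
        return (int(draw.render_pass), depth_key)
    return sorted(draws, key=key)


draws = [
    DrawCall("glass_near", Pass.BLENDED, 2.0),
    DrawCall("fence", Pass.DISCARD, 5.0),
    DrawCall("wall", Pass.OPAQUE, 8.0),
    DrawCall("glass_far", Pass.BLENDED, 6.0),
    DrawCall("floor", Pass.OPAQUE, 1.0),
]
ordered_names = [d.name for d in sort_draws(draws)]
# ['wall', 'floor', 'fence', 'glass_far', 'glass_near']
```

Sorting per draw call rather than per triangle is the kind of approximate sorting the quote describes as often good enough.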

– Nicol Bolas:

“visibility of a fragment(and hence depth) is only determinable after fragment processing and not during Early-Z or PowerVR’s HSR (hidden surface removal) etc.” That’s not entirely true. Either Early-Z or discard can prevent a fragment from being drawn. So it’s very possible to do one and then the other. PowerVR can’t for the reasons you and I stated. But traditional renderers certainly can. If they don’t, it would only be because the discarding logic is bundled with the depth testing logic. That’s a hardware design issue, not an algorithmic necessity.

– gmaclachlan:

I missed out the case where a discard fragment is rendered ‘behind’ existing geometry :S - is that what you mean? Both early Z and HSR will reject fragments without fragment processing in that situation. Even then, Early-Z requires the obscuring fragments to be rendered before the obscured, discard ones or the discard shader still needs to be run for those fragments to determine depth. HSR is not dependent on submission order in this case - at the end of a frame, if discard fragments are behind opaque fragments then they don’t get processed by PowerVR.
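The submission-order point in this comment can be illustrated with a toy single-pixel model. It is a sketch of the argument, not a description of how any hardware is implemented: the function names are hypothetical, smaller depth is assumed to be closer, and discard-type fragments are assumed never to occlude anything, since their visibility is unknown before shading.

```python
FAR = 1.0   # assumed depth of the far plane


def shaded_with_early_z(fragments):
    """Early-Z: test each fragment against what has been drawn so far."""
    depth_buffer = FAR
    shaded = []
    for name, depth, kind in fragments:
        if depth >= depth_buffer:
            continue                  # rejected before the fragment shader runs
        shaded.append(name)           # the fragment shader runs
        if kind == "opaque":
            depth_buffer = depth      # only opaque fragments act as occluders here
    return shaded


def shaded_with_deferred_hsr(fragments):
    """Deferred visibility: resolve the nearest opaque depth first, then shade."""
    nearest_opaque = min((d for _, d, k in fragments if k == "opaque"), default=FAR)
    shaded = []
    for name, depth, kind in fragments:
        if kind == "opaque" and depth == nearest_opaque:
            shaded.append(name)       # the visible opaque fragment
        elif kind == "discard" and depth < nearest_opaque:
            shaded.append(name)       # alpha-tested fragment in front of it
    return shaded


leaf_first = [("leaf", 0.6, "discard"), ("wall", 0.4, "opaque")]
wall_first = list(reversed(leaf_first))

early_z_leaf_first = shaded_with_early_z(leaf_first)    # ['leaf', 'wall']
early_z_wall_first = shaded_with_early_z(wall_first)    # ['wall']
hsr_leaf_first = shaded_with_deferred_hsr(leaf_first)   # ['wall']
hsr_wall_first = shaded_with_deferred_hsr(wall_first)   # ['wall']
```

In this model the Early-Z path shades the occluded `leaf` fragment only when it is submitted before the `wall`, while the deferred path gives the same result for both orders.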

– Nicol Bolas:

A fragment can fail to be rendered for many reasons. Depth test is one, discard is another. Early-Z simply performs the depth test first. The only reason discard would interfere with this is if the discard logic were tied into the depth test logic in the hardware. Just because something passes the depth test does not mean that it will pass everything. If the depth and discard are coupled, it is only because hardware is built that way, not because it has to be done that way by the algorithm. You should be able to do Early-Z tests and still later discard.

– gmaclachlan:

Of course you can, but it’s slower that way because depth information isn’t determined until later in the pipeline. ‘discard’ in the shader (with the usual render state) affects the depth write value of a fragment so it affects the performance of subsequent depth testing in hardware. This is what makes it different from blending.

– Nicol Bolas:

Depth writing would happen at the same time as color writing. discard, like the depth test or stencil test, would affect both depth and color writing. Doing the depth test before the fragment shader does not require that the depth tested is ultimately written. Now, certain Z-culling techniques (Hi-Z, Hierarchical-Z, etc) do require that. So you can’t use them with discard. But those are different from Early-Z, which is simply doing the depth test per-fragment before the fragment shader.

There is evidence that some hardware can do depth tests without depth writes. This can be found with extensions like AMD_conservative_depth, which allows one to say how they’re changing the depth value they’re writing. This allows hardware to do the depth test early if it knows that you’re not changing the depth in a way that will make that test invalid. That wouldn’t work if the hardware wrote the depth it tested against; it still has to write the actual depth computed in the shader. So Early-Z tests are not linked to Early-Z writes.
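The separation argued for here, an early depth test with the depth write deferred until after the shader, can be modelled for a single pixel. This is a hedged, sequential toy model: `run_pixel` is a hypothetical name, smaller depth is assumed to be closer, and the pipeline latency raised elsewhere in the thread is not modelled.

```python
def run_pixel(fragments, early_z=True):
    """Process (depth, discards) fragments for one pixel in submission order.

    Returns (final_depth, shader_invocations).
    """
    depth_buffer = 1.0   # assumed far plane
    shaded = 0
    for depth, discards in fragments:
        if early_z and not depth < depth_buffer:
            continue                 # early test: rejected before shading
        shaded += 1                  # the fragment shader runs here
        if discards:
            continue                 # discard: no colour write and no depth write
        if depth < depth_buffer:     # late test, needed when early_z is off
            depth_buffer = depth     # the depth write happens after the shader
    return depth_buffer, shaded


fragments = [(0.5, False), (0.7, True), (0.3, True), (0.4, False)]
with_early_z = run_pixel(fragments, early_z=True)       # (0.4, 3)
without_early_z = run_pixel(fragments, early_z=False)   # (0.4, 4)
```

Both runs end with the same depth, but the early test skips shading the occluded `0.7` fragment. The discarded `0.3` fragment passes the early test yet writes nothing, so the depth that was tested is not the depth that gets written.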

– gmaclachlan:

discard tri goes through pipeline, passes early-Z, Hi-Z etc., goes to fragment processing, depth is determined with colour and written out. But… if a fragment is rendered to the same x-y after this then the Early Z etc. has to wait for the discard fragment’s depth to be processed and written before it can perform the depth test with up-to-date data (or potentially process obscured fragments). Hence performance hit. BTW I think I mention disabling depth write (but not tests) above.

Examples

Demonstrates seven different techniques for order-independent transparency in Vulkan.

https://github.com/nvpro-samples/vk_order_independent_transparency

“Mistakes are, after all, the foundations of truth, and if a man does not know what a thing is, it is at least an increase in knowledge if he knows what it is not.” ― Carl G. Jung